Recently I started refreshing and expanding on my knowledge of digital design. But I found that the way I learned to design logic for FPGAs in a HDL (VHDL in my case) seems lacking in a way. At university, we were dependent on Xilinx Vivado, a proprietary GUI program to write, test, synthesize, and implement HDL. Version control was done by sending zips with VHDL files back and forth. Verification was done by writing testbenches in VHDL and, at best, these loaded in files with input stimuli and the expected outputs, but mostly, verifying the outputs was done by viewing the traces from the testbenches, which, weirdly enough, felt quicker.

While getting somewhat familiar with modern software design, I realized that there are better ways to write, test and share text files that deterministically describe some sort of behavior. Concepts like unit tests, version control, and CI (Continuous Integration) seem to also fit hardware design in HDL.

using git for version control and collaboration, writing tests, adding the tests to a CI pipeline so that they verify each new version that is pushed, etc.

This post is about my attempt to bring these concepts to hardware design.

The Design

First I need a somewhat complicated design to test things out with. It needs to have some complexity in order to feel the advantages from formal verification, but it can’t be too complex so that I don’t lose myself in the design. The design to test the aforementioned concepts with, will be a Wishbone-UART interface.

The Wishbone-UART interface

This will be the design that will be used to test out the workflow

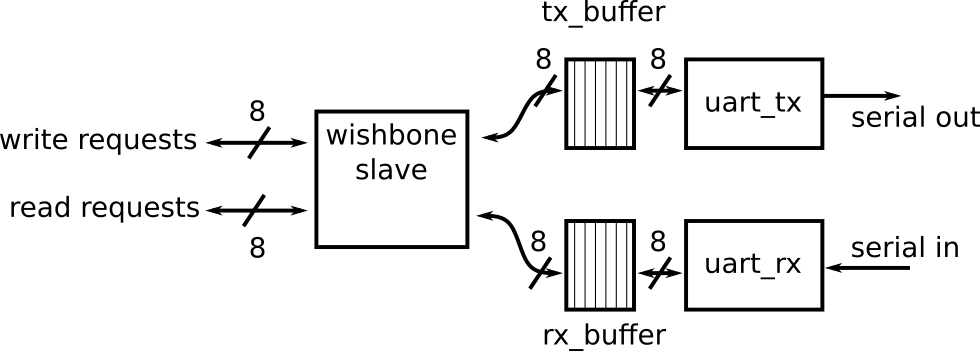

This module will act as a wishbone slave on one side; listening to read and write requests.

A write request is answered by writing a byte to the tx_buffer that then will be transmitted by a UART transmitter.

A read request will check the rx_buffer for a byte received over UART and return that byte if there is one.

Unit Testing

The first thing to do is to streamline our testing methodology a bit. What I often found lacking with the method of writing testbenches in a HDL is that code-reuse doesn’t seem to be that straight-forward. I often found myself rewriting code, while the testbenches often had a lot of functionality in common.

The first tool to introduce here is verilator. It is an open-source tool that calls itself the “fastest Verilog/SystemVerilog simulator”. It works by parsing the verilog or systemverilog code and translating it to C++ (that is quite similar or equal to systemC). But rather following the Verilator examples of still writing testbenches in HDL and adding a minimal C++ wrapper, we’ll write C++ unit tests that link with our verilog modules, module per module.

Not only does driving our modules in C++ directly add the possibility of using a unit testing framework (like criterion), it also allows us to use some of the nice things that the C++ language offers. Things like inheritance and templates allow for more code-reuse. In C++ one can write the basic testbench functionality in a base template. The template class is where the verilog module class, that verilator generated, can fit in. Each module’s derived testbench class can then fill in this template and add its own functionality.

Each module has its own testbench class and its own test suite, in which the testbench class is instantiated as a fixture. Different kinds of edge case or usage stimuli can be validated in their own tests. Each test can then optionally write out its own trace for debugging purposes.

Formal Verification

But, while this approach feels more comfortable than what I was used to, it’s still a bit of a hassle to write the tests and find the correct stimuli to check. There’s also the doubt whether you considered all the possibilities. Maybe there’s some erroneous behavior in one of the modules that you haven’t accounted for.

This is where formal verification comes in. Long story short: formal verification is where a tool tries to mathematically prove (or disprove) whether a module follows formal properties or is equivalent to a simpler reference model.

A good example that shows the difference between formal verification and simulation-based validation is to see how, with both methodologies, you would try to find out whether (x + 1)^2 = x^2 + 2x + 1. With simulation-based validation, you’ll need to find a set of numbers that are then filled in in both the RHS equation and the LHS equation. If both sides are equal for all numbers in the set you built, you assume that both sides are equal. With formal validation, a tool will try to prove that both sides are equal using mathematical properties, like (x + 1)^2 = (x+1)(x+1), (x + 1)(x + 1) = x(x + 1) + 1(x + 1), etc.

Lets take the Wishbone bus interface in our design.

Wishbone is a bus standard.

Our wishbone slave must follow certain rules that are defined by the standard.

We can boil down these rules to a combination of assume and assert statements.

A formal verification tool will try to find whether, taking certain assumptions into account, the assert statements can or cannot be violated.

If an assert statement fails, the tool will produce an error and give you a trace that ends with the failure.

The formal verification engine that I use is SymbiYosys (sby).

This tool works with the open-source synthesis suite Yosys for things like extracting the state-machines out of the verilog designs it needs to validate.

The way I’ll use it is in prove mode with the smtbmc engine which uses the yices smt solver in the back-end.

This basically proves that our wishbone slave module will never end up in a bad state, or misbehave on the wishbone bus.

While working with sby, I started feeling that a tool like this can, in some cases, replace writing (unit) tests. When you’re familiar with it, I reckon that writing what your design has to do in formal properties is faster than writing separate tests with the stimuli that show correctness and test edge cases. Tools like sby basically find these stimuli for you. The downside is that these tools could take longer to run.

CI Pipelines

When working with something like a remote git repository, it might be handy to know whether the remote master branch, or branches that want to merge with master, pass all tests. Especially when working with a big team, or with open-source projects, this can be really handy. That’s why CI is used by software developers, so why not use it for this kind of hardware as well.

This is where using open-source CLI programs starts to seriously pay off. Because the programs are free and can run headless, they can be easily put in a docker container in which CI runners can run tests.

I made a quick-and-dirty container that includes the previously mentioned software needed to run the tests.

It is based on the Ubuntu container.

Unfortunately, some programs in the official repositories were a bit too much out-of-date.

The version in the repos of verilator didn’t have the needed functionality, so it was built from source.

Yosys and SymbiYosys were also built from source.

The CI pipeline consists of three stages at the moment.

The first stage is a build stage. This stage Verilates the Verilog design sources and builds the C++ unit tests. Running a linter to check the correctness of all verilog sources, and warning for things like possibly unwanted latches, could also fit in this stage.

The second and third stage run the tests. First the unit tests. These are faster than the formal verification. If these error out, there’s something really wrong with the design. But if these pass, we can continue to the formal verification stage. This stage takes a bit longer, since it will run in prove mode. If this stage passes, the design follows the formal description. Note that does not mean that the design is 100% correct, it just means that it is very likely to be 100% correct. The formal description might contain errors itself, but you are less likely to make errors in this description than in the actual design.

After the first three stages, one could also add some static timing analysis together with Yosys synthesis to get a first estimate whether or not the design will work with a minimum clock frequency.

You could also add some sort of implementation test, checking whether the design will actually fit on the FPGA. Off course, for this implementation test you’re a bit limited. You could use NextPNR for this, but this tool only works for a limited set of FPGAs.

Conclusion

Open-source hardware design tools allow for interesting, modern workflows. They’re flexible and easy to install, which makes it so that they work well with things like git and CI. The downside is unfortunately that, at the moment, they can be a bit limited, especially when arriving at the end of the design process and you’re thinking of implementation, technology mapping and the like.

The project’s source can be found on gitlab so that others can take inspiration from it.

Credits

A lot of inspiration came from the zipcpu blog. This blog also contain some resources to learn formal verification with Yosys and SymbiYosys.